AI / RAG Pipeline

CogniGen FAQ Chatbot

Document-based FAQ chatbot with RAG pipeline, multi-tenant vector search, and confidence-scored responses.

Overview

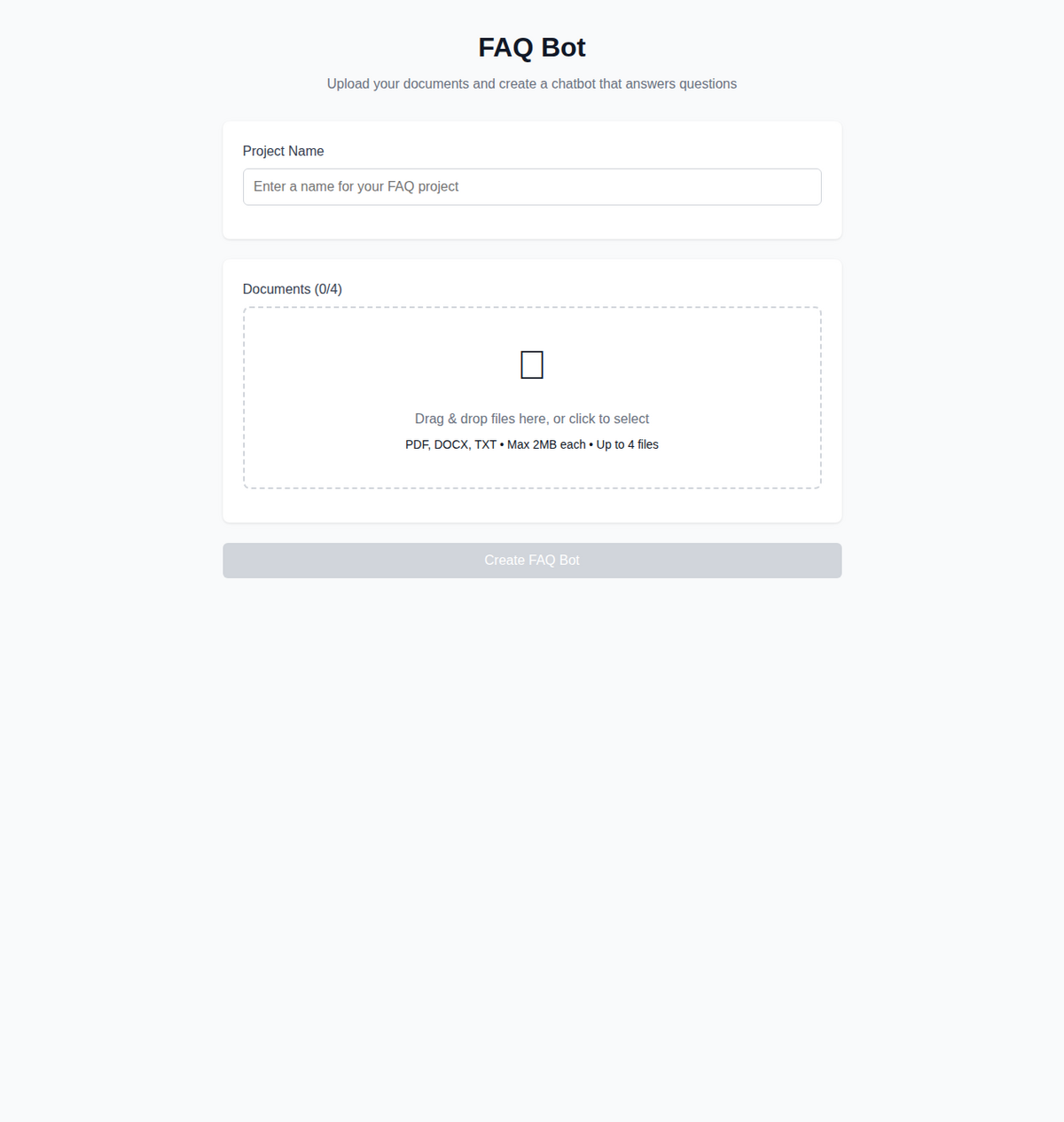

CogniGen FAQ Chatbot is a production RAG chatbot for question answering over real business documents. The system ingests PDF, DOCX, and TXT files, chunks them to preserve semantic coherence, embeds the chunks with OpenAI, and stores them in ChromaDB with per-organization vector isolation.

The RAG pipeline uses distance-based confidence scoring (high/medium/low/none) to show how well retrieval went. When the retrieved context isn't strong enough, the system says so instead of hallucinating. Each tenant's documents are isolated in their own dynamically created ChromaDB collection, named with a `project_{id}` pattern.

Document ingestion runs as a background task with status tracking (pending/processing/completed/failed), API endpoints are rate-limited to protect against abuse, and a React single-page app (SPA) provides the chat and document management interface.
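The status values above form a small state machine. Below is a minimal sketch of that lifecycle, assuming a hypothetical in-memory `DocumentRecord` and a hypothetical `process_document` task body; the FastAPI wiring and any persistence of the status are omitted.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class DocStatus(str, Enum):
    PENDING = "pending"
    PROCESSING = "processing"
    COMPLETED = "completed"
    FAILED = "failed"


# Allowed transitions; the two terminal states have no outgoing edges.
TRANSITIONS = {
    DocStatus.PENDING: {DocStatus.PROCESSING},
    DocStatus.PROCESSING: {DocStatus.COMPLETED, DocStatus.FAILED},
    DocStatus.COMPLETED: set(),
    DocStatus.FAILED: set(),
}


@dataclass
class DocumentRecord:  # hypothetical record type, not the project's model
    doc_id: str
    status: DocStatus = DocStatus.PENDING
    error: Optional[str] = None

    def transition(self, new: DocStatus) -> None:
        """Move to a new status, rejecting transitions the lifecycle does not allow."""
        if new not in TRANSITIONS[self.status]:
            raise ValueError(f"illegal transition {self.status.value} -> {new.value}")
        self.status = new


def process_document(record: DocumentRecord, ingest: Callable[[str], None]) -> None:
    """Body of a background task: run ingestion and record the outcome."""
    record.transition(DocStatus.PROCESSING)
    try:
        ingest(record.doc_id)  # assumed to extract, chunk, embed, and store
    except Exception as exc:  # any ingestion failure marks the document as failed
        record.error = str(exc)
        record.transition(DocStatus.FAILED)
    else:
        record.transition(DocStatus.COMPLETED)
```

Catching the exception and recording it matters because a FastAPI background task runs after the upload response has been sent, so the status field is how the client learns that ingestion failed.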

The platform also includes a companion Productivity Assistant module. It routes natural-language requests, via keyword-based intent classification, to four specialized services (meeting planner, email drafter, task breakdown, and follow-up extractor). These services generate downloadable artifacts in several formats (ICS calendar invites, CSV task lists, JSON data, and Markdown action items), using regex-based extraction of structured output from the LLM responses.
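Keyword-based intent classification can be sketched as counting keyword hits per service and picking the best match. This is a minimal sketch: the keyword sets, the intent identifiers, and `classify_intent` are assumptions for illustration, since the project's actual keyword lists are not documented here.

```python
import re
from typing import Optional

# Hypothetical keyword sets for the four services named in the text.
INTENT_KEYWORDS = {
    "meeting_planner": {"meeting", "schedule", "calendar", "invite", "agenda"},
    "email_drafter": {"email", "draft", "reply", "write", "message"},
    "task_breakdown": {"task", "tasks", "breakdown", "steps", "plan", "subtasks"},
    "followup_extractor": {"followup", "follow-up", "action", "items", "notes"},
}


def classify_intent(request: str) -> Optional[str]:
    """Return the intent with the most keyword hits, or None if no keyword matches."""
    # Lowercase word tokens; hyphenated words such as "follow-up" stay whole.
    tokens = set(re.findall(r"[a-z]+(?:-[a-z]+)*", request.lower()))
    scores = {intent: len(tokens & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)  # ties go to the first intent listed
    return best if scores[best] > 0 else None
```

Dispatching to a service is then a dictionary lookup on the returned name. Keyword matching needs no extra LLM call and is deterministic, but a request phrased without any listed keyword returns `None`, so a fallback path is needed.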

Hard Problems

Challenge

Multi-tenant vector store isolation — documents from Organization A must never appear in Organization B's search results.

Solution

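Dynamic ChromaDB collection naming with a `project_{id}` pattern. Each tenant gets its own collection, and therefore its own embedding index. Because every query runs against a single tenant's collection, another tenant's chunks are never search candidates.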
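The isolation comes from scoping every write and query to one collection. The sketch below models that with a hypothetical in-memory `TenantVectorStore` (plain lists and brute-force cosine distance, not ChromaDB); with the real client, the same pattern would call `get_or_create_collection(name=f"project_{id}")` once per tenant.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def collection_name(project_id: int) -> str:
    """Per-tenant collection name following the project_{id} pattern."""
    return f"project_{project_id}"


def cosine_distance(a: List[float], b: List[float]) -> float:
    """1 - cosine similarity; assumes non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


class TenantVectorStore:
    """Hypothetical stand-in for a vector DB: one independent index per collection."""

    def __init__(self) -> None:
        self._collections: Dict[str, List[Tuple[str, List[float]]]] = defaultdict(list)

    def add(self, project_id: int, chunk: str, embedding: List[float]) -> None:
        self._collections[collection_name(project_id)].append((chunk, embedding))

    def query(self, project_id: int, embedding: List[float], k: int = 3) -> List[Tuple[str, float]]:
        # Only the caller's collection is searched; .get() avoids creating empty collections.
        items = self._collections.get(collection_name(project_id), [])
        scored = [(chunk, cosine_distance(embedding, emb)) for chunk, emb in items]
        return sorted(scored, key=lambda pair: pair[1])[:k]
```

Isolation here is only as strong as the step that maps the authenticated caller to `project_id`; the store itself trusts whatever ID it is given.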

Challenge

Preventing LLM hallucinations when retrieved context is insufficient.

Solution

Distance-based confidence scoring (high/medium/low/none) derived from the average embedding distance of the retrieved chunks and the number of chunks retrieved. The system prompt instructs the model to answer only from the retrieved context and to cite its sources.
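The two signals can be combined into a tiered score. This is a minimal sketch: the threshold constants and the rule that "high" requires at least two chunks are assumptions, since the project's actual cutoffs are not stated. Distances are treated as lower-is-closer, which is how ChromaDB reports them in the `distances` field of a query result.

```python
from typing import List

# Hypothetical thresholds; real values depend on the embedding model and distance metric.
HIGH_MAX_DIST = 0.35
MEDIUM_MAX_DIST = 0.55
LOW_MAX_DIST = 0.75
MIN_CHUNKS_FOR_HIGH = 2


def confidence_level(distances: List[float]) -> str:
    """Map the distances of retrieved chunks (lower = closer) to high/medium/low/none."""
    if not distances:
        return "none"  # nothing retrieved: the answer should say so
    avg = sum(distances) / len(distances)
    if avg <= HIGH_MAX_DIST and len(distances) >= MIN_CHUNKS_FOR_HIGH:
        return "high"
    if avg <= MEDIUM_MAX_DIST:
        return "medium"
    if avg <= LOW_MAX_DIST:
        return "low"
    return "none"  # chunks exist but are too far from the question to rely on
```

A "none" result is the point where the chatbot would decline to answer rather than let the model improvise from weak context.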

Challenge

Processing diverse document types while preserving semantic coherence for embedding.

Solution

Text extraction with PyPDF2 (PDF) and python-docx (DOCX), followed by LangChain's RecursiveCharacterTextSplitter with 1,000-character chunks and a 200-character overlap. Ingestion runs as a background task with status tracking.
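The size and overlap settings fix the stride: with 1,000-character chunks and a 200-character overlap, each new chunk starts 800 characters after the previous one when no earlier cut point is used. The sketch below is a simplified, dependency-free stand-in for LangChain's splitter, not its exact recursive algorithm: it cuts at the last paragraph break, line break, or space inside the window, in that order of preference. `chunk_text` is a hypothetical name.

```python
from typing import List, Sequence


def chunk_text(
    text: str,
    chunk_size: int = 1000,
    chunk_overlap: int = 200,
    separators: Sequence[str] = ("\n\n", "\n", " "),
) -> List[str]:
    """Split text into chunks of at most chunk_size chars; neighbours share chunk_overlap chars."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    chunks: List[str] = []
    start = 0
    while start < len(text):
        end = min(start + chunk_size, len(text))
        if end < len(text):
            # Prefer the coarsest separator found past the overlap region,
            # so every step still advances through the text.
            for sep in separators:
                cut = text.rfind(sep, start + chunk_overlap + 1, end)
                if cut != -1:
                    end = cut + len(sep)
                    break
        chunks.append(text[start:end])
        if end == len(text):
            break
        start = end - chunk_overlap  # step back to create the overlap
    return chunks
```

The overlap means text near a cut appears in both neighbouring chunks, which helps retrieval when the relevant passage straddles a boundary.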

Challenge

Reliably extracting structured data from free-form LLM text output for artifact generation.

Solution

A regex-based parser for Markdown code blocks labeled with type markers (`meeting_json`, `tasks_json`). The LLM is prompted to emit that specific format, and the parser extracts the labeled blocks and validates them into typed objects.
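A minimal sketch of the extraction step, assuming the type marker is the info string of a fenced block (three backticks followed by `meeting_json`). The `Meeting` fields and the `extract_typed_blocks` and `parse_meeting` names are hypothetical.

```python
import json
import re
from dataclasses import dataclass
from typing import Dict, List

FENCE = "`" * 3  # built programmatically so this example can sit inside a Markdown fence

# Matches a fenced block tagged with a type marker and captures (marker, body).
BLOCK_RE = re.compile(
    re.escape(FENCE) + r"(meeting_json|tasks_json)[ \t]*\n(.*?)\n" + re.escape(FENCE),
    re.DOTALL,
)


@dataclass
class Meeting:  # hypothetical schema
    title: str
    start: str  # ISO 8601 datetime string
    duration_minutes: int


def extract_typed_blocks(llm_output: str) -> Dict[str, List[object]]:
    """Return parsed JSON payloads grouped by type marker; malformed JSON is skipped."""
    found: Dict[str, List[object]] = {}
    for marker, body in BLOCK_RE.findall(llm_output):
        try:
            payload = json.loads(body)
        except json.JSONDecodeError:
            continue  # skip blocks the model did not format as valid JSON
        found.setdefault(marker, []).append(payload)
    return found


def parse_meeting(payload: dict) -> Meeting:
    """Check required fields and types before an artifact is generated."""
    meeting = Meeting(
        title=str(payload["title"]),
        start=str(payload["start"]),
        duration_minutes=int(payload["duration_minutes"]),
    )
    if meeting.duration_minutes <= 0:
        raise ValueError("duration_minutes must be positive")
    return meeting
```

Validated objects like `Meeting` would then feed the ICS, CSV, JSON, or Markdown generators; a missing key raises `KeyError` instead of producing a broken artifact.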

Key Decisions

| Decision | Chose | Over | Because |
|---|---|---|---|
| Vector database | ChromaDB | Pinecone | Local-first deployment, no external service dependency, and HNSW indexing out of the box. No API costs for vector operations. |
| Task processing | FastAPI background tasks | Celery | Simpler deployment with no Redis/RabbitMQ broker. The document ingestion workload doesn't justify a distributed task queue. |
| Embedding model | OpenAI text-embedding-3-small | Sentence Transformers | Production-grade quality, the same provider as the LLM, and cost-efficient for the retrieval workload. |